OpenVPN is required for CloudBlue Commerce to communicate with its extensions.

OpenVPN is necessary for:

- connectivity between CloudBlue Commerce Management Node and the AKS cluster.

- connectivity between UI nodes and the AKS cluster when the UI is deployed outside the AKS cluster.

To connect the AKS services subnet with CloudBlue Commerce Management Node, the OpenVPN Helm package is deployed inside the AKS cluster. As a result, CloudBlue Commerce can use the AKS DNS and access its extensions.

When the UI is deployed to virtual machines rather than as a Helm package inside the AKS cluster, all UI nodes must also be connected to the AKS services subnet using OpenVPN.

Prerequisites

Deployment Procedure

To install the OpenVPN Helm package:

Note: In the steps below, we use example values and assume that AKS was deployed with the following IP ranges:

• POD subnet: 10.244.0.0/16

• Service subnet: 10.0.0.0/16

-

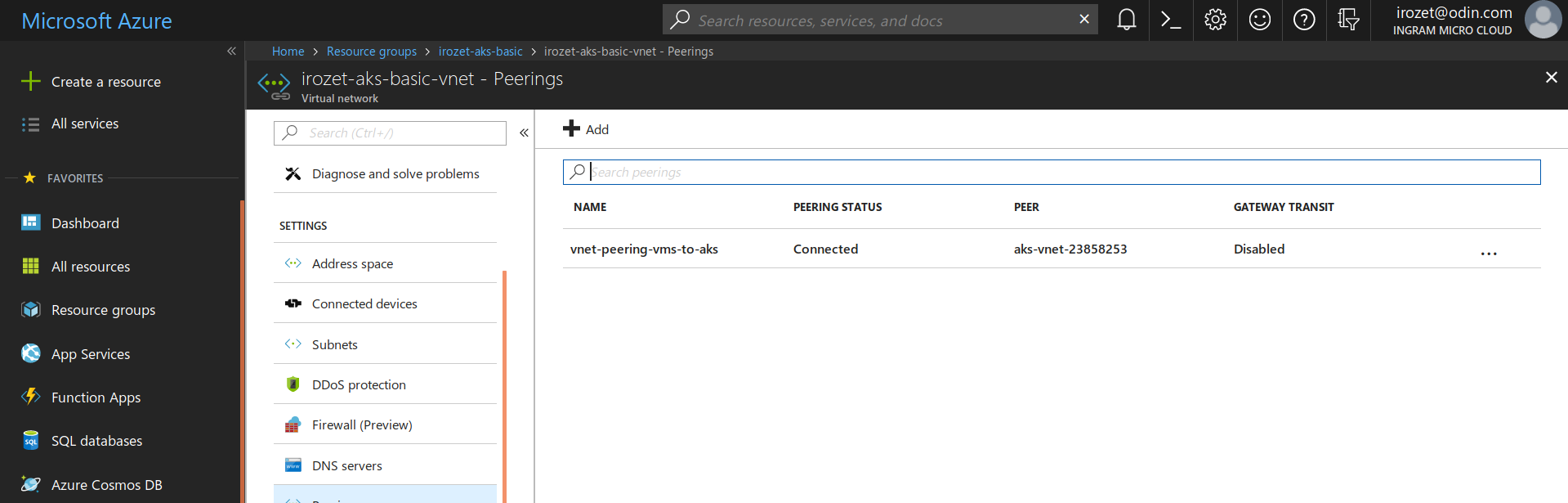

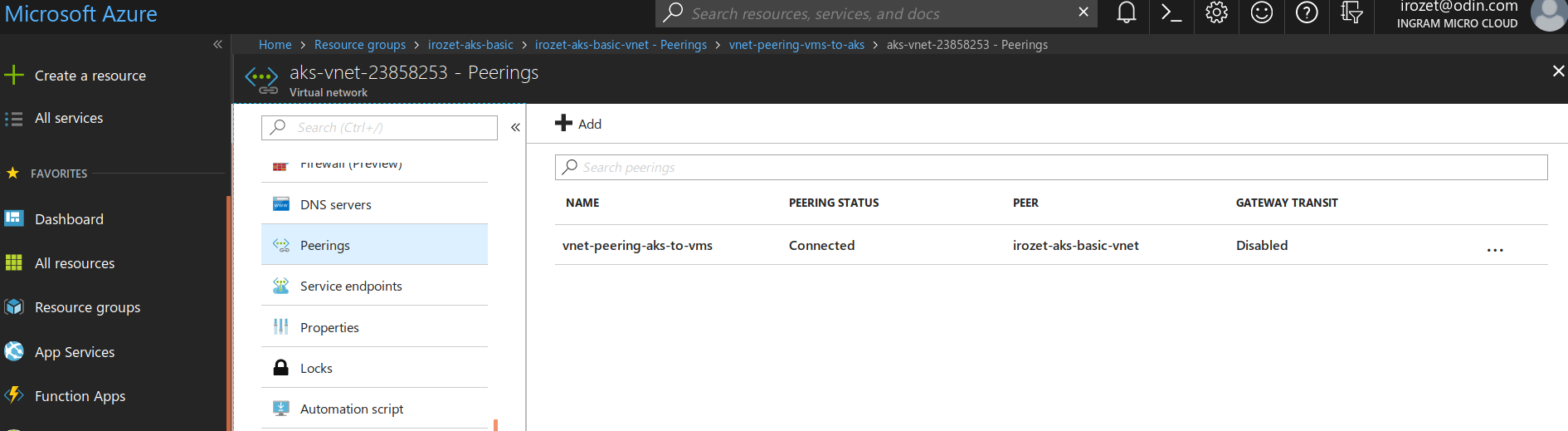

Configure VNet peering in Azure between IAAS VNet and AKS VNet (see the screenshots below).

Note: For a Basic AKS network in Azure, VNet peering must be configured in both directions (from the AKS VNet to the Management Node (MN) VNet and from the MN VNet to the AKS VNet). This ensures connectivity between the VPN load balancer and the VPN clients installed on CloudBlue Commerce Management Node and the UI nodes.

Install the OpenVPN client package on CloudBlue Commerce Management Node and all UI nodes by running these commands on each node:

    yum install -y epel-release
    yum install -y openvpn
    yum remove -y epel-release

Install the OpenVPN server package inside the AKS cluster:

Note: OpenVPN installation requires OpenVPN server access certificates to be passed to the installation script among OpenVPN chart values. To obtain such certificates, a temporary OpenVPN installation is created during which the certificates are automatically generated. After that, a permanent OpenVPN installation is created.

Perform a temporary installation of the OpenVPN chart:

    $ cat << EOF > ovpn_proto_values.yaml
    service:
      externalPort: 1195
      internalPort: 1195
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    persistence:
      enabled: false
    openvpn:
      redirectGateway:
    EOF
    $ helm install stable/openvpn --wait --name openvpn-proto --values ovpn_proto_values.yaml

Find the name of the installed POD to use it in subsequent commands:

    $ kubectl get pod | grep openvpn-proto
    openvpn-proto-69dd76456d-qn87l   1/1   Running   0   2m
    $ pod_name=$(kubectl get pod | grep openvpn-proto | awk '{print $1}')
    $ echo $pod_name
    openvpn-proto-69dd76456d-qn87l
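The grep-based lookup above returns every line that matches, so it can yield more than one name if several matching PODs exist. The following is a minimal sketch of a stricter filter, not part of the original procedure: the helper name first_running_pod is hypothetical, and it assumes the default column layout of kubectl get pod (NAME, READY, STATUS, ...).

```shell
# Read `kubectl get pod` output on stdin and print the name of the first
# POD whose name starts with the given prefix and whose STATUS is Running.
# Assumes the default column order: NAME READY STATUS RESTARTS AGE.
first_running_pod() {
    awk -v prefix="$1" 'index($1, prefix) == 1 && $3 == "Running" { print $1; exit }'
}

# Hypothetical usage on the node where kubectl is configured:
#   pod_name=$(kubectl get pod | first_running_pod openvpn-proto)
```

If no matching POD is running yet, the function prints nothing, so an empty pod_name can be treated as "not ready".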

Check to make sure that OpenVPN server access certificates have been generated by searching for the /etc/openvpn/certs/pki/dh.pem file in the POD:

Note: Server certificates are generated automatically after the POD installation is complete. The certificate generation can take significant time (several minutes).

    $ kubectl exec $pod_name find /etc/openvpn/certs/pki/dh.pem
    /etc/openvpn/certs/pki/dh.pem
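Because certificate generation can take several minutes, the check may need to be repeated. The following is a minimal sketch of a polling helper under stated assumptions: the function name retry_until is hypothetical, and the attempt count and delay in the usage comment are example values only.

```shell
# Run a command repeatedly until it succeeds or the attempts run out.
# Usage: retry_until <attempts> <delay_seconds> <command> [args...]
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry_until() {
    local attempts="$1"
    local delay="$2"
    shift 2
    local n=1
    while :; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$(( n + 1 ))
        sleep "$delay"
    done
}

# Hypothetical usage (assumes $pod_name was set in the previous step):
#   retry_until 60 10 kubectl exec "$pod_name" find /etc/openvpn/certs/pki/dh.pem
```

With the example values, the helper would give up after roughly ten minutes, which is longer than the "several minutes" mentioned in the note.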

Create OpenVPN client configs for CloudBlue Commerce Management Node and all UI nodes:

Note: The client configs must be unique for each node.

Find the external IP of the OpenVPN service:

    service_ip=$(kubectl get service openvpn-proto -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

For each node, generate a client certificate by running the commands below on CloudBlue Commerce Management Node. In the example below, iaas2k8s is the OpenVPN client service profile name.

    $ kubectl exec ${pod_name} /etc/openvpn/setup/newClientCert.sh "iaas2k8smn1" "${service_ip}"
    $ kubectl cp ${pod_name}:/etc/openvpn/certs/pki/iaas2k8smn1.ovpn iaas2k8s_mn_1.conf
    $ kubectl exec ${pod_name} /etc/openvpn/setup/newClientCert.sh "iaas2k8smn2" "${service_ip}"
    $ kubectl cp ${pod_name}:/etc/openvpn/certs/pki/iaas2k8smn2.ovpn iaas2k8s_mn_2.conf
    $ kubectl exec ${pod_name} /etc/openvpn/setup/newClientCert.sh "iaas2k8sui1" "${service_ip}"
    $ kubectl cp ${pod_name}:/etc/openvpn/certs/pki/iaas2k8sui1.ovpn iaas2k8s_ui_1.conf
    $ kubectl exec ${pod_name} /etc/openvpn/setup/newClientCert.sh "iaas2k8sui2" "${service_ip}"
    $ kubectl cp ${pod_name}:/etc/openvpn/certs/pki/iaas2k8sui2.ovpn iaas2k8s_ui_2.conf
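The per-node commands follow one pattern, so they could be generated in a loop. The following is a minimal sketch, not part of the original procedure: the function name generate_client_configs and the KUBECTL override variable are hypothetical, and node labels such as mn_1 or ui_2 are assumed to follow the naming used in the example above.

```shell
# KUBECTL can be overridden (for example, KUBECTL=echo) to preview the
# commands without contacting the cluster. This variable is an assumption
# made for this sketch, not a kubectl feature.
KUBECTL=${KUBECTL:-kubectl}

# Usage: generate_client_configs <pod_name> <service_ip> <node_label>...
# For a label such as mn_1, the client profile is iaas2k8smn1 and the
# resulting local file is iaas2k8s_mn_1.conf.
generate_client_configs() {
    local pod="$1"
    local ip="$2"
    shift 2
    local node profile
    for node in "$@"; do
        # Profile name: the iaas2k8s prefix plus the label without underscores.
        profile="iaas2k8s$(printf '%s' "$node" | tr -d '_')"
        $KUBECTL exec "${pod}" /etc/openvpn/setup/newClientCert.sh "${profile}" "${ip}"
        $KUBECTL cp "${pod}:/etc/openvpn/certs/pki/${profile}.ovpn" "iaas2k8s_${node}.conf"
    done
}

# Hypothetical usage (assumes $pod_name and $service_ip are already set):
#   generate_client_configs "$pod_name" "$service_ip" mn_1 mn_2 ui_1 ui_2
```

Running it first with KUBECTL=echo prints the commands that would be executed, which makes it easy to compare them with the list above.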

On each node:

Copy the corresponding client config file to /etc/openvpn/iaas2k8s.conf.

To ensure that all clients reconnect within a short period (for example, 10 seconds) if the OpenVPN server POD is restarted, add the following setting to /etc/openvpn/iaas2k8s.conf:

    connect-timeout 10

Copy the server certificates from the POD and include them into an AKS secret. Then create a values file for the OpenVPN deployment (adjust the IP ranges, if necessary):

    kubectl exec -ti $pod_name cat /etc/openvpn/certs/pki/private/server.key > server.key
    kubectl exec -ti $pod_name cat /etc/openvpn/certs/pki/ca.crt > ca.crt
    kubectl exec -ti $pod_name cat /etc/openvpn/certs/pki/issued/server.crt > server.crt
    kubectl exec -ti $pod_name cat /etc/openvpn/certs/pki/dh.pem > dh.pem
    kubectl exec -ti $pod_name cat /etc/openvpn/certs/pki/ta.key > ta.key
    kubectl create secret generic openvpn-keys-secret --from-file=./server.key --from-file=./ca.crt --from-file=./server.crt --from-file=./dh.pem --from-file=./ta.key
    kubectl get secret openvpn-keys-secret -o yaml > openvpn-keys-secret.yaml
    cat << EOF > ovpn_values.yaml
    service:
      externalPort: 1195
      internalPort: 1195
      loadBalancerIP: $service_ip
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    persistence:
      enabled: false
    replicaCount: 2
    ipForwardInitContainer: true
    openvpn:
      redirectGateway:
      OVPN_NETWORK: 10.241.0.0
      OVPN_SUBNET: 255.255.0.0
      OVPN_K8S_POD_NETWORK: 10.244.0.0
      OVPN_K8S_POD_SUBNET: 255.255.0.0
      OVPN_K8S_SVC_NETWORK: 10.0.0.0
      OVPN_K8S_SVC_SUBNET: 255.255.0.0
      DEFAULT_ROUTE_ENABLED: false
      keystoreSecret: "openvpn-keys-secret"
    EOF
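The values file takes each range as a network address plus a dotted netmask, whereas the AKS ranges in the note at the start of the procedure are written in CIDR form. When the ranges differ from the example values, the conversion could look like the sketch below. The function name cidr_to_network_and_mask is hypothetical, and the sketch assumes a well-formed IPv4 CIDR string with a prefix length from 0 to 32.

```shell
# Convert an IPv4 CIDR range (for example, 10.244.0.0/16) into
# "<network> <netmask>" (for example, "10.244.0.0 255.255.0.0").
# No input validation is performed; the argument is assumed to be valid.
cidr_to_network_and_mask() {
    local cidr="$1"
    local network="${cidr%/*}"   # part before the slash
    local prefix="${cidr#*/}"    # prefix length after the slash
    local mask=""
    local bits i
    for i in 1 2 3 4; do
        # Take up to 8 bits of the remaining prefix for this octet.
        if [ "$prefix" -ge 8 ]; then
            bits=8
        else
            bits="$prefix"
        fi
        # An octet with N leading one-bits equals 256 - 2^(8 - N).
        mask="${mask}$(( 256 - (1 << (8 - bits)) ))"
        if [ "$i" -lt 4 ]; then
            mask="${mask}."
        fi
        prefix=$(( prefix - bits ))
    done
    echo "$network $mask"
}

cidr_to_network_and_mask 10.244.0.0/16   # prints: 10.244.0.0 255.255.0.0
```

The first field would go into the corresponding *_NETWORK value and the second into the matching *_SUBNET value.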

Remove the temporary OpenVPN installation:

helm delete openvpn-proto --purge

Install the OpenVPN package (provide the values from the values file created above):

helm install stable/openvpn --wait --name internal-vpn --values ovpn_values.yaml

On each OpenVPN client (on CloudBlue Commerce Management Node and all UI nodes), enable the VPN connection to start automatically at boot time; then start the OpenVPN client service:

    systemctl enable openvpn@iaas2k8s
    systemctl start openvpn@iaas2k8s

Check to make sure that the VPN connection works; for an active VPN connection you will receive a response similar to this one:

    [root@linmn01 cloudblue]# systemctl status openvpn@iaas2k8s --lines 0
    ● openvpn@iaas2k8s.service - OpenVPN Robust And Highly Flexible Tunneling Application On iaas2k8s
       Loaded: loaded (/usr/lib/systemd/system/openvpn@.service; enabled; vendor preset: disabled)
       Active: active (running) since Fri 2018-09-07 18:12:39 UTC; 1h 45min ago
     Main PID: 71444 (openvpn)
       Status: "Initialization Sequence Completed"
       CGroup: /system.slice/system-openvpn.slice/openvpn@iaas2k8s.service
               └─71444 /usr/sbin/openvpn --cd /etc/openvpn/ --config iaas2k8s.conf
    [root@linmn01 cloudblue]# nslookup kubernetes.default.svc.cluster.local 10.0.0.10
    Server:         10.0.0.10
    Address:        10.0.0.10#53

    Non-authoritative answer:
    Name:   kubernetes.default.svc.cluster.local
    Address: 10.0.0.1
    [root@linmn01 cloudblue]# ip route get 10.0.0.1
    10.0.0.1 via 10.241.0.9 dev tun0 src 10.241.0.10
        cache
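The routing part of this check could be scripted. The following is a minimal sketch, not part of the original procedure: the function name routes_via_tunnel is hypothetical, and it assumes that the VPN interface name starts with tun, as in the sample output above.

```shell
# Succeed when the given `ip route get` output shows a route through a
# tun device (for example, "... dev tun0 ..."); fail otherwise.
routes_via_tunnel() {
    case "$1" in
        *" dev tun"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Hypothetical usage on a VPN client node:
#   routes_via_tunnel "$(ip route get 10.0.0.1)" && echo "route goes through the VPN"
```

The sketch inspects only the route, so DNS resolution through the AKS DNS would still need the nslookup check shown above.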