Before upgrading CloudBlue Commerce to version 21.0, complete these steps:

- Step 1. Perform a precheck for your current CloudBlue Commerce installation.

  Important:

  - Performing a CloudBlue Commerce installation precheck is a mandatory manual procedure. It is not launched automatically during the upgrade.

  - The report from a CloudBlue Commerce installation precheck must be attached to each post-upgrade issue when contacting CloudBlue Commerce support.

- Step 2. Perform a precheck for your CloudBlue Commerce databases.

  Important:

  - Performing a CloudBlue Commerce database precheck is a mandatory manual procedure. It is not launched automatically during the upgrade.

  - The report from a CloudBlue Commerce database precheck must be attached to each post-upgrade issue when contacting CloudBlue Commerce support.

Step 1. Perform a Precheck for Your Current CloudBlue Commerce Installation

Before upgrading CloudBlue Commerce to version 21.0, you need to make sure that your current CloudBlue Commerce installation can be upgraded. The precheck utility scans your current CloudBlue Commerce installation and detects issues that must be fixed before the upgrade. Note that the utility itself does not fix errors.

To meet your upgrade timeline, we strongly recommend that you launch the precheck procedure in advance.

To perform the precheck, complete these steps:

- Contact support to obtain the installation precheck archive, and extract all files from it to a directory on the CloudBlue Commerce management node.

- From the directory with the extracted files, launch the precheck with this command:

  python precheck.py

- Follow the instructions of the utility and fix the reported errors.

- When the precheck is finished, view the precheck-report-%DATE%.txt file in the /var/log/pa/prechecks directory on the management node. It contains all errors found by the utility.

  Note: To view the report, use the UTF-8 encoding.
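If the prechecks directory contains reports from several runs, a small helper can pick the newest one. The sketch below assumes that the most recently modified precheck-report-*.txt file belongs to the current run and that GNU find is available, as on CentOS/RHEL 7. The function name latest_precheck_report is hypothetical and is not part of the precheck utility.

```shell
#!/bin/bash
# latest_precheck_report [DIR]
# Prints the path of the most recently modified precheck-report-*.txt file in DIR
# (default: /var/log/pa/prechecks). Prints nothing if there is no report.
latest_precheck_report() {
  local dir="${1:-/var/log/pa/prechecks}"
  # %T@ = modification time in seconds since the epoch, %p = file path
  find "$dir" -maxdepth 1 -type f -name 'precheck-report-*.txt' -printf '%T@ %p\n' 2>/dev/null \
    | sort -n \
    | tail -n 1 \
    | cut -d ' ' -f 2-
}
```

For example, LANG=en_US.UTF-8 less "$(latest_precheck_report)" opens the newest report in a UTF-8 locale. This assumes the en_US.UTF-8 locale is installed on the management node.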

Step 2. Perform a Precheck for Your CloudBlue Commerce Databases

Before upgrading CloudBlue Commerce to version 21.0, you need to make sure that the OSS and BSS databases of your current CloudBlue Commerce installation can be upgraded. The database precheck utility checks these databases, using the dumps you provide as input data, and detects issues that must be fixed before the upgrade. Note that the utility itself does not fix errors.

To meet your upgrade timeline, we strongly recommend that you launch the precheck procedure in advance.

To perform the precheck, complete these steps:

- Prepare a Docker host according to Database Precheck Requirements.

- Prepare the input data according to Database Precheck Input Data and save it to the /opt/db_precheck_data directory on the Docker host.

  Note: Use the --precheck-data-dir <PATH> parameter to specify a custom location for the input data.

- Download the database precheck archive, or contact support to obtain it, and extract all files from the archive to a directory on the Docker host.

- On the Docker host, launch the precheck from the directory with the extracted files:

  python db_precheck.py

  Note: If you use a proxy server, make sure that Docker is configured to use it. For details, refer to the Docker documentation.
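The Docker documentation describes configuring the Docker daemon's proxy through a systemd drop-in file. The sketch below writes such a file. The function name write_docker_proxy_dropin, the default NO_PROXY value and the proxy URL in the usage note are illustrative assumptions, so check the Docker documentation for your Docker version before relying on it.

```shell
#!/bin/bash
# write_docker_proxy_dropin DROPIN_DIR PROXY_URL [NO_PROXY]
# Writes DROPIN_DIR/http-proxy.conf so that the Docker daemon uses PROXY_URL
# for outgoing HTTP and HTTPS connections, such as image pulls.
write_docker_proxy_dropin() {
  local dropin_dir="$1"
  local proxy_url="$2"
  local no_proxy="${3:-localhost,127.0.0.1}"   # assumed default; adjust for your network
  if [ -z "$dropin_dir" ] || [ -z "$proxy_url" ]; then
    echo "usage: write_docker_proxy_dropin DROPIN_DIR PROXY_URL [NO_PROXY]" >&2
    return 1
  fi
  mkdir -p "$dropin_dir" || return 1
  printf '[Service]\nEnvironment="HTTP_PROXY=%s"\nEnvironment="HTTPS_PROXY=%s"\nEnvironment="NO_PROXY=%s"\n' \
    "$proxy_url" "$proxy_url" "$no_proxy" > "$dropin_dir/http-proxy.conf"
}
```

For example, run write_docker_proxy_dropin /etc/systemd/system/docker.service.d http://proxy.example.com:3128 (a placeholder URL), then systemctl daemon-reload and systemctl restart docker. The command systemctl show --property=Environment docker displays the environment the daemon now uses.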

- Follow the instructions of the utility and fix the reported errors. When the database precheck is finished, the utility shows the location of the db_precheck.tgz archive file in the /var/log/pa directory on the Docker host. The archive includes logs and reports: all errors found by the utility and a summary report in the db_precheck_report.json file.

  To fix the errors from the summary report, refer to this KB article. If you cannot fix the errors, send the db_precheck.tgz file to CloudBlue Commerce support before upgrading CloudBlue Commerce.
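To read the summary report without unpacking the whole archive, you can extract only db_precheck_report.json. This sketch assumes the archive is /var/log/pa/db_precheck.tgz, so pass the location the utility prints if it differs. It makes no assumption about the structure of the JSON file. The function name extract_precheck_summary is hypothetical.

```shell
#!/bin/bash
# extract_precheck_summary [ARCHIVE] [DEST_DIR]
# Extracts db_precheck_report.json from the database precheck archive into DEST_DIR
# (a new temporary directory by default) and prints the path of the extracted file.
extract_precheck_summary() {
  local archive="${1:-/var/log/pa/db_precheck.tgz}"
  local dest="${2:-$(mktemp -d)}"
  local member
  # Find the report at any depth inside the archive.
  member=$(tar -tzf "$archive" | grep -E '(^|/)db_precheck_report\.json$' | head -n 1)
  if [ -z "$member" ]; then
    echo "db_precheck_report.json not found in $archive" >&2
    return 1
  fi
  mkdir -p "$dest" || return 1
  tar -xzf "$archive" -C "$dest" "$member" || return 1
  echo "$dest/$member"
}
```

For example, python -m json.tool "$(extract_precheck_summary)" pretty-prints the summary report.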

Database Precheck Requirements

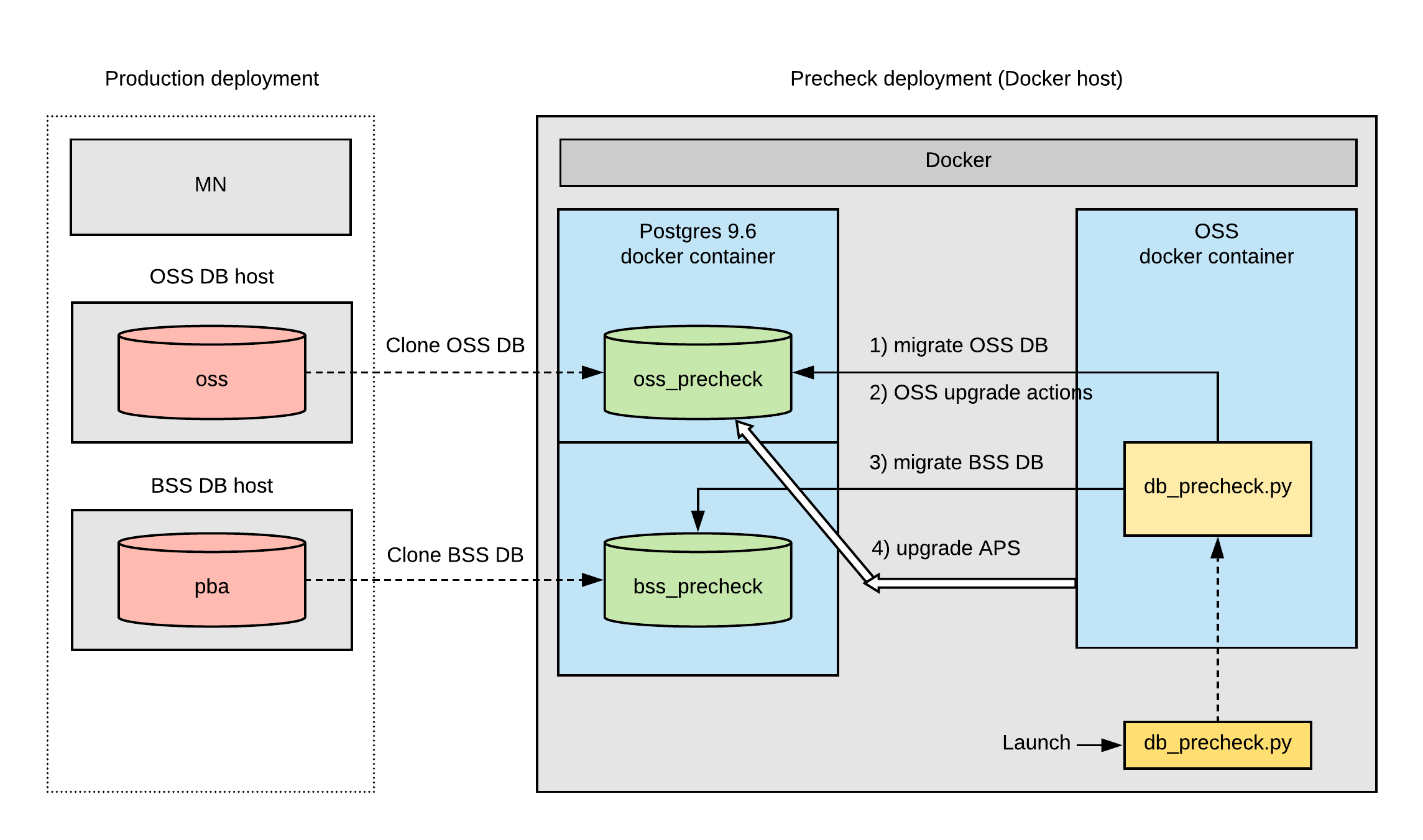

The database precheck model requires one VM with Docker (a Docker host) outside your production network. The Docker host must have Internet access to download the Docker images used to perform the database precheck.

Note: The database precheck tool uses iptables to isolate the database checking process from the external environment. Also, the database precheck tool uses special names for database clones: oss_precheck for the OSS database clone and bss_precheck for the BSS database clone.

The OS requirements for that Docker host are:

- CentOS 7.4 (x64)

- RHEL 7.4 (x64)

The software requirements for that Docker host are:

- Python 2.7

- Docker 17.0.7 (or later); please refer to the Docker documentation for more details.

- iptables

The minimum hardware requirements for that Docker host are:

- CPU: 4 cores or more (2.4 GHz or higher)

- RAM: 16 GB or more

- Disk: 1 TB or more

Note: This disk must be capable of storing the OSS and BSS databases with their dumps.
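Before launching the database precheck, you can collect the Docker host facts that the requirements above refer to. The sketch below assumes the following:

- GNU coreutils (sort -V, nproc, df --output), as shipped with CentOS/RHEL 7.
- A running Docker daemon.
- Input data under /opt.

The function names are hypothetical. The sketch only reports RAM and disk values and does not enforce the minimums.

```shell
#!/bin/bash
# version_ge A B: succeeds when version A is greater than or equal to version B.
# Relies on GNU "sort -V", which compares dotted versions component by component.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# report_docker_host [DATA_DIR]: prints values to compare with the requirements above.
report_docker_host() {
  local data_dir="${1:-/opt}"
  echo "OS:       $(cat /etc/redhat-release 2>/dev/null || echo unknown)"
  echo "Python:   $(python --version 2>&1)"
  echo "Docker:   $(docker version --format '{{.Server.Version}}' 2>/dev/null || echo 'not available')"
  echo "iptables: $(command -v iptables || echo 'not found')"
  echo "CPU:      $(nproc) cores"
  echo "RAM:      $(awk '/^MemTotal:/ { printf "%.1f GiB", $2 / 1048576 }' /proc/meminfo)"
  echo "Disk:     $(df -h --output=avail "$data_dir" | tail -n 1 | tr -d ' ') available on $data_dir"
}
```

For example, version_ge "$(docker version --format '{{.Server.Version}}')" 17.0.7 || echo "Docker is older than required" compares the Docker server version with the minimum stated above.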

The maximum hardware requirements for that Docker host are:

The timezone requirements for the Docker host are:

- The Docker host timezone must be the same as the timezone of the database servers you want to perform the precheck for. To ensure the correct timezone on your Docker host, refer to this article.
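One way to compare time zones is to read the zone name from the /etc/localtime symbolic link that systemd-based CentOS/RHEL 7 systems use. The sketch assumes that layout and SSH access to the database servers, and the host name in the usage note is a placeholder. It compares operating system time zones only. PostgreSQL has its own TimeZone setting (SHOW timezone;), which may differ. The function names are hypothetical.

```shell
#!/bin/bash
# host_timezone [LINK]: prints the IANA zone name (for example, Europe/Berlin)
# that LINK (default: /etc/localtime) points to; fails if LINK is not a symlink.
host_timezone() {
  local link="${1:-/etc/localtime}"
  local target
  target=$(readlink "$link") || return 1
  # Strip everything up to and including ".../zoneinfo/".
  echo "${target##*zoneinfo/}"
}

# check_timezone_match DOCKER_HOST_TZ DB_SERVER_TZ: reports whether the two zones are equal.
check_timezone_match() {
  if [ "$1" = "$2" ]; then
    echo "OK: both hosts use $1"
  else
    echo "MISMATCH: Docker host uses $1, database server uses $2" >&2
    return 1
  fi
}
```

For example, run check_timezone_match "$(host_timezone)" "$(ssh root@oss-db.example.com readlink /etc/localtime | sed 's|.*zoneinfo/||')". If the zones differ, timedatectl set-timezone <Zone> changes the Docker host zone.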

The Database Precheck Model

Database Precheck Input Data

Before running the database precheck utility, you must prepare the following input data:

Important: For your PostgreSQL databases, use a pg_dump utility of the same version as the database. If your PostgreSQL version is earlier than 11.0, you must use a pg_dump utility of version 11.0 or later to create the database dumps.
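The version rule above can be expressed as a small check. For servers earlier than 11, any pg_dump 11 or later is accepted. For other servers, pg_dump must have the same major version as the server. The sketch compares major versions only. It assumes the server version comes from SHOW server_version_num (for example, 110005 for 11.5 and 90615 for 9.6.15). The function names are hypothetical.

```shell
#!/bin/bash
# pg_major_from_num NUM: converts server_version_num (e.g. 110005) to a major version (11).
# For 9.x servers this yields 9, which is enough to apply the "earlier than 11" rule.
pg_major_from_num() {
  echo $(( $1 / 10000 ))
}

# pg_dump_major TEXT: extracts the major version from "pg_dump --version" output,
# e.g. "pg_dump (PostgreSQL) 11.5" -> 11.
pg_dump_major() {
  echo "$1" | sed -n 's/.*(PostgreSQL) \([0-9][0-9]*\).*/\1/p'
}

# pg_dump_ok SERVER_MAJOR DUMP_MAJOR: succeeds if this pg_dump may be used for the server.
pg_dump_ok() {
  if [ "$1" -lt 11 ]; then
    [ "$2" -ge 11 ]        # servers earlier than 11: pg_dump 11 or later
  else
    [ "$2" -eq "$1" ]      # servers 11 and later: same major version
  fi
}
```

For example, on a DB server:

- Run server_num=$(su - postgres -c "psql -tAc 'SHOW server_version_num'").
- Then run pg_dump_ok "$(pg_major_from_num "$server_num")" "$(pg_dump_major "$(pg_dump --version)")". It succeeds only if the pg_dump on the PATH meets the rule.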

PostgreSQL

Important:

- All files in the input data directory must have the following access permission: “read” (including “other” users).

- All directories in the input data directory must have the following access permissions: “read” and “execute” (including “other” users).

- An OSS database dump named pg_dump-oss.dump. On your OSS DB server, run the following command to create the dump:

  su - postgres -c 'pg_dump --format=directory --no-owner --jobs 4 -U postgres oss -f pg_dump-oss.dump'

- An OSS database dump of global objects named pg_dump-oss.globals.dump. On your OSS DB server, run the following command to create the dump:

  su - postgres -c 'pg_dumpall --globals-only --no-owner -U postgres > pg_dump-oss.globals.dump'

- A BSS database dump named pg_dump-bss.dump. On your BSS DB server, run the following command to create the dump:

  su - postgres -c 'pg_dump --format=directory --no-owner --jobs 4 -U postgres pba -f pg_dump-bss.dump'

- A BSS database dump of global objects named pg_dump-bss.globals.dump. On your BSS DB server, run the following command to create the dump:

  su - postgres -c 'pg_dumpall --globals-only --no-owner -U postgres > pg_dump-bss.globals.dump'

- A copy of the APS directory located in the /usr/local/pem directory on your OSS MN.

- A copy of the credentials directory located in the /usr/local/pem directory on your OSS MN.
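After copying the dumps and the APS and credentials directories to the Docker host, you can check the input directory against the list above and the permission rules. Because su - starts a login shell, the commands above write the dumps to the postgres user's home directory, which is typically /var/lib/pgsql on CentOS/RHEL. Copy them from there. This sketch assumes the default /opt/db_precheck_data location and GNU find, and its function names are hypothetical.

```shell
#!/bin/bash
# Entries the input data directory is expected to contain (from the list above).
PRECHECK_INPUTS="pg_dump-oss.dump pg_dump-oss.globals.dump pg_dump-bss.dump pg_dump-bss.globals.dump APS credentials"

# check_precheck_input [DIR]: prints every problem found and fails if there is any.
check_precheck_input() {
  local dir="${1:-/opt/db_precheck_data}"
  local problems=0 entry bad
  for entry in $PRECHECK_INPUTS; do
    if [ ! -e "$dir/$entry" ]; then
      echo "missing: $dir/$entry"
      problems=$((problems + 1))
    fi
  done
  # Files must be readable by "other"; directories must be readable and executable by "other".
  bad=$( { find "$dir" -type f ! -perm -o+r; find "$dir" -type d ! -perm -o+rx; } 2>/dev/null )
  if [ -n "$bad" ]; then
    echo "$bad" | sed 's/^/insufficient permissions: /'
    problems=$((problems + 1))
  fi
  [ "$problems" -eq 0 ]
}

# fix_precheck_permissions [DIR]: grants the required "other" permissions recursively.
fix_precheck_permissions() {
  local dir="${1:-/opt/db_precheck_data}"
  find "$dir" -type d -exec chmod o+rx {} + && find "$dir" -type f -exec chmod o+r {} +
}
```

For example, run fix_precheck_permissions as root on the Docker host, then check_precheck_input. The check prints nothing and succeeds once the directory is ready.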

Azure Database for PostgreSQL

Important:

- All files in the input data directory must have the following access permission: “read” (including “other” users).

- All directories in the input data directory must have the following access permissions: “read” and “execute” (including “other” users).

- An OSS database dump named pg_dump-oss.dump.

- An OSS database dump of global objects named pg_dump-oss.globals.dump.

- A BSS database dump named pg_dump-bss.dump.

- A BSS database dump of global objects named pg_dump-bss.globals.dump.

- A copy of the APS directory located in the /usr/local/pem directory on your OSS MN.

- A copy of the credentials directory located in the /usr/local/pem directory on your OSS MN.

Use the following instructions to prepare the necessary database dumps:

- Log in to your OSS MN as a user with sudo privileges that allow that user to use pg_dump.

- Create a directory for dumps and data:

  # mkdir dbprecheck_data

- In the directory intended for dumps and data, create a script named get_dumps.sh with the following contents:

  ```shell
  #!/bin/bash
  # Creates the OSS and BSS database dumps and their global objects dumps in the current directory.

  FILE="/usr/local/pem/etc/Kernel.conf"

  if [ ! -f "$FILE" ]; then
    echo "$FILE does not exist. Are you sure you are on MN?"
    exit 1
  fi

  # Load the OSS (dsn_*) and BSS (bss_db_*) database connection settings.
  source "$FILE"

  echo "Creating OSS database dump....."
  PGPASSWORD="$dsn_passwd" pg_dump --format=directory --no-owner --jobs 4 -h "$dsn_host" -U "$dsn_login" "$dsn_db_name" -f pg_dump-oss.dump || exit 1
  echo "OSS database dump created: ./pg_dump-oss.dump"

  echo "Creating BSS database dump....."
  PGPASSWORD="$bss_db_passwd" pg_dump --format=directory --no-owner --jobs 4 -h "$bss_db_host" -U "$bss_db_user" "$bss_db_name" -f pg_dump-bss.dump || exit 1
  echo "BSS database dump created: ./pg_dump-bss.dump"

  # Azure Database for PostgreSQL (single server) uses the user@servername login form;
  # ${host%%.*} takes the server name from the host FQDN.
  echo "Creating OSS database globals dump......"
  read -r -s -p "Enter pgadmin password for OSS database postgres azure service: " pgadmin_passwd
  echo
  PGPASSWORD="$pgadmin_passwd" pg_dumpall --globals-only -h "$dsn_host" -U pgadmin@"${dsn_host%%.*}" > pg_dump-oss.globals.dump || exit 1
  echo "OSS database globals dump created: ./pg_dump-oss.globals.dump"

  echo "Creating BSS database globals dump......"
  read -r -s -p "Enter pgadmin password for BSS database postgres azure service: " pgadmin_passwd
  echo
  PGPASSWORD="$pgadmin_passwd" pg_dumpall --globals-only -h "$bss_db_host" -U pgadmin@"${bss_db_host%%.*}" > pg_dump-bss.globals.dump || exit 1
  echo "BSS database globals dump created: ./pg_dump-bss.globals.dump"
  ```

- Execute these commands:

  # cd dbprecheck_data
  # chmod a+x get_dumps.sh
  # ./get_dumps.sh

  Note: The get_dumps.sh script asks for the pgadmin passwords of the OSS and BSS Azure database services.

The following dump files appear inside your dbprecheck_data folder:

- pg_dump-bss.dump
- pg_dump-bss.globals.dump
- pg_dump-oss.dump
- pg_dump-oss.globals.dump
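Before transferring the dumps to the Docker host, you may want to confirm that they are complete. A directory-format dump made by pg_dump contains a toc.dat table-of-contents file that pg_restore --list can read, and a globals dump is a plain SQL text file. The sketch below relies on these properties and assumes it runs in the dbprecheck_data directory. It checks only that the dumps are present and readable, not the data inside them, and the function name is hypothetical.

```shell
#!/bin/bash
# verify_precheck_dumps [DIR]: checks the four dumps in DIR (default: current directory).
verify_precheck_dumps() {
  local dir="${1:-.}"
  local failed=0 name
  for name in pg_dump-oss.dump pg_dump-bss.dump; do
    # A directory-format dump always contains toc.dat.
    if [ ! -f "$dir/$name/toc.dat" ]; then
      echo "$name: not a directory-format dump (toc.dat missing)"
      failed=1
    # If pg_restore is installed, also make sure it can read the table of contents.
    elif command -v pg_restore >/dev/null 2>&1 && ! pg_restore --list "$dir/$name" >/dev/null 2>&1; then
      echo "$name: pg_restore cannot read the table of contents"
      failed=1
    fi
  done
  for name in pg_dump-oss.globals.dump pg_dump-bss.globals.dump; do
    if [ ! -s "$dir/$name" ]; then
      echo "$name: missing or empty"
      failed=1
    fi
  done
  [ "$failed" -eq 0 ]
}
```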